The ultimate guide for A/B testing email campaigns

June 30, 2024

If you think your emails are as good as they can possibly be, think again.

There are so many variables that can affect the success of your marketing emails and how prospects engage with them - from subject lines and formatting to messaging and calls-to-action (CTAs). It’s virtually impossible to land on the winning formula straight away!

But that’s where A/B testing comes in. In this blog, we’ll explain how A/B email testing works in marketing, variables you should consider testing, and how to make sure your tests deliver actionable results.

What is A/B testing in email marketing?

A/B testing, also known as split testing, is when you send two versions of an email to different segments of your audience.

You change one element at a time (like the subject line or call-to-action) to see which version performs better.

Essentially, it helps you figure out what resonates with your audience so you can improve engagement with your email campaigns.

Why should marketers care about A/B testing?

A/B testing is crucial because it provides data-driven insights into what works and what doesn't in your email campaigns.

This helps you fine-tune your strategies and achieve better results. Instead of guessing, you can rely on real data to make informed decisions, leading to higher open rates, click-through rates, and conversions.

If you don’t A/B test, you could be missing out on easy fixes that would make big differences to your engagement metrics!

3 key steps of A/B testing

1. Choose your variables

Start simple: decide which elements you want to test based on your goals.

For example, if you want to increase open rates, focus on subject lines. If you aim to boost click-through rates, test your call-to-action buttons.

Look at past data to see where improvements are needed and begin by focusing on variables that could have the most significant impact.

2. Define your sample size

Your sample size matters. Too small, and your results might not be reliable.

Try using an online calculator to determine the right sample size for your tests. Then, split your audience evenly between the versions you're testing to ensure fair results.
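If your email tool doesn't split the audience for you, here is a minimal sketch of an even, random split. The function name `split_audience` and the example addresses are made up for illustration, and dropping one recipient when the list has an odd length is just one way to keep the groups equal.

```python
import random

def split_audience(recipients, seed=None):
    """Shuffle recipients and split them into two equal-sized groups."""
    shuffled = list(recipients)
    # A seeded generator makes the split reproducible; omit the seed for a fresh shuffle.
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    # With an odd number of recipients, the last one is left out so both groups match in size.
    return shuffled[:half], shuffled[half:2 * half]

# Hypothetical audience of 340 addresses
audience = [f"user{i}@example.com" for i in range(340)]
group_a, group_b = split_audience(audience, seed=42)
print(len(group_a), len(group_b))
```

Shuffling before splitting matters: if you simply cut a list that is sorted by sign-up date or company size, the two groups may differ in ways that have nothing to do with your variable.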

3. Check your results

After running your test, focus on the metrics that are most relevant to your goals, like open rates or click-through rates.

Your results are only actionable if the differences between your test audiences are statistically significant - that is, they have to be large enough to show that your variable, rather than chance, made the difference!

You can use an online tool to check this, too.
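If you'd rather check significance yourself, one common approach is a two-proportion z-test. The sketch below is an illustration, not the method behind any particular online tool. The function name and the counts (60 and 85 replies out of 170 emails each) are invented for the example, and it assumes a two-sided test at the usual 5% threshold.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled rate under the assumption that both versions perform the same
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical results: version A got 60 replies, version B got 85, from 170 emails each
z, p_value = two_proportion_z_test(60, 170, 85, 170)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
print("Significant at 95%" if p_value < 0.05 else "Not significant at 95%")
```

A p-value below 0.05 corresponds to the 95% significance level. Note that this sketch will fail with a division by zero if neither version, or every email in both versions, gets a response.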

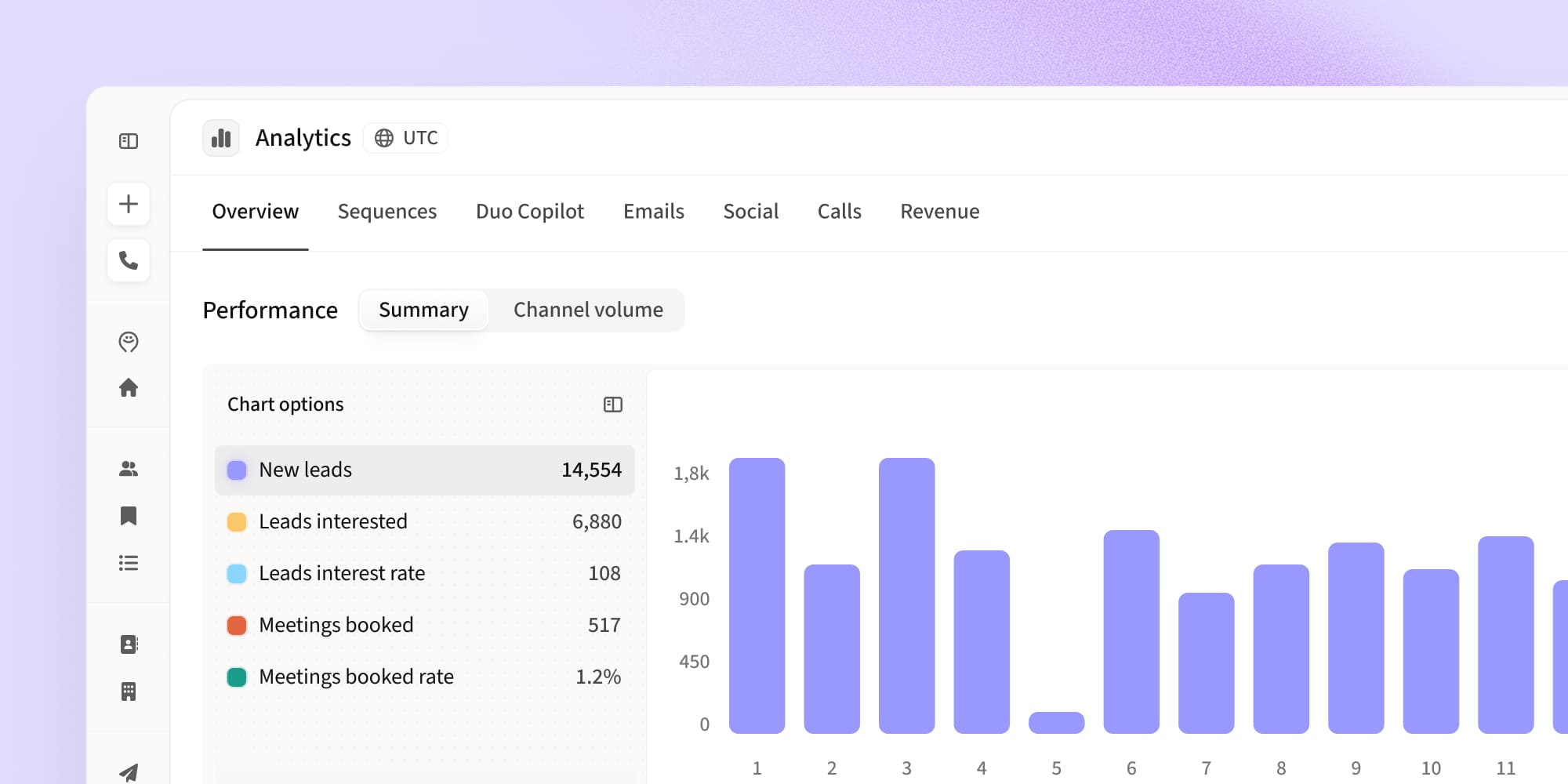

Take a look at the example below.

Say you decide to test two different calls-to-action, with a baseline of 35% (the average reply rate of your email campaigns), a minimum detectable effect of 30%, and a statistical significance of 95%.

According to our online calculator, your sample size needs to be 170. This means you have to send 170 emails for each call-to-action and analyze the results.
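To give a feel for what a sample size calculator does with these inputs, here is a rough sketch of a classic fixed-sample formula for comparing two rates. It assumes a two-sided test and 80% statistical power, neither of which is stated above, and the function name is made up. Calculators use different methods and defaults, so don't expect this to reproduce the 170 figure; with these assumptions it will typically ask for more emails per version.

```python
from math import ceil, sqrt
from statistics import NormalDist

def sample_size_per_variant(baseline, relative_mde, significance=0.95, power=0.80):
    """Estimate emails needed per version to detect a relative lift over the baseline."""
    p1 = baseline
    p2 = baseline * (1 + relative_mde)  # e.g. 35% lifted by 30% of itself
    delta = p2 - p1
    # Critical values for a two-sided test (assumption) and the chosen power (assumption)
    z_alpha = NormalDist().inv_cdf(1 - (1 - significance) / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_avg = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_avg * (1 - p_avg))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / delta ** 2)

n = sample_size_per_variant(baseline=0.35, relative_mde=0.30)
print(f"Emails needed per version under these assumptions: {n}")
```

The useful pattern to notice is the trade-off: the smaller the difference you want to detect, the more emails each version needs.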

After testing both calls-to-action, imagine these were the results of your test:

As you can see, call-to-action 2 had a higher reply rate than call-to-action 1. Because we set our minimum detectable effect at 30% of our 35% baseline (that is, 10.5 percentage points), this means the difference between the two calls-to-action exceeded the required value.

All that remains is to build on what you've learned and keep experimenting to continually improve your email campaigns!

9 A/B testing ideas

Email subject line

The subject line is the first thing recipients see, so you need to make it count.

You can test different lengths, word orders, and personalization techniques.

For example, compare "20% off sitewide, this week only!" with "{{first_name}}, we’re giving you 20% off this week!"

Email sender name

Experiment with using a real person's name versus your company name.

For instance, we might test "Richard from Amplemarket" against just "Amplemarket Team" to see which gets more opens.

Email formatting and body

Vary your email content by testing different call-to-action placements, wording, and email structure.

For example, see if moving your CTA higher up in the email makes a difference in clicks, or whether longer or shorter paragraphs result in better engagement.

Preview text

The email preview text complements your subject line. You can test different approaches like asking a question or creating urgency to see what encourages more opens.

Interactive content

Readers are sometimes reluctant to click on hard CTAs, so look for other ways to encourage engagement.

Try adding elements like surveys or quizzes to your emails and test if they lead to higher click-through rates.

Offers and incentives

Experiment with different types of offers - such as percentage discounts versus fixed amounts or gifts - to see what drives more conversions.

Visuals

Test whether including images or keeping your email text-only works better.

You can also try different styles, like using drawings versus screenshots, to see which resonates more.

Copy

Test the length, tone, and personalization of your email copy. See if a positive, concise message outperforms a detailed, formal one.

Calls to action

Your CTA is crucial. Test different button styles, placements, and copy to see what drives more clicks.

A/B testing email metrics to measure

How you measure success will depend on the goal of your test, but generally speaking, these are the most important metrics to keep an eye on:

- Open rate - measures the percentage of people who opened your email. If you are A/B testing subject lines, this is the metric you should track.

- Reply rate - measures the percentage of people who replied to your email. You should track this metric if you are A/B testing changes to the body of your email (e.g. value proposition, call-to-action, tone of voice).

- Conversion or click-through rate - measures the percentage of people who completed a given goal (e.g. scheduling a call, signing up for a free trial, clicking on a link). The variables that have the most impact on this metric are CTAs, copy, offers, and other messaging factors.
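As a quick illustration of how these metrics are calculated, here is a small sketch. The campaign numbers are invented, and using delivered emails as the denominator is an assumption; some tools divide by emails sent instead, so check how yours defines each rate.

```python
def rate(count, delivered):
    """Return a percentage, or 0.0 if nothing was delivered."""
    return 100 * count / delivered if delivered else 0.0

# Hypothetical results for one version of an email
campaign = {"delivered": 170, "opened": 85, "replied": 34, "clicked": 17}

open_rate = rate(campaign["opened"], campaign["delivered"])
reply_rate = rate(campaign["replied"], campaign["delivered"])
click_through_rate = rate(campaign["clicked"], campaign["delivered"])

print(f"Open rate: {open_rate:.1f}%")                    # 50.0%
print(f"Reply rate: {reply_rate:.1f}%")                  # 20.0%
print(f"Click-through rate: {click_through_rate:.1f}%")  # 10.0%
```

Whichever definition you use, apply the same one to both versions of your test so the comparison is fair.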

A/B testing for landing pages

You don't have to stop at your emails!

A/B testing your landing pages can also lead to better results to support your pipeline generation. Test different headlines, images, and CTA placements to find what converts the most visitors.

Tips for running effective A/B tests

Define your hypothesis

Before you start, have a clear hypothesis. For example, "I believe personalizing the subject line will increase open rates."

This helps you define the focus of your test so you can work towards actionable results.

Change only one variable at a time

If you’re testing a new call-to-action and a new subject line at the same time, you won’t be able to separate the effect of each change on your results! You should only test one variable at a time so you can draw clear conclusions from your experiments.

That means you may have to run a succession of tests until you find the perfect email formula. In that case, make sure you prioritize tests according to their potential impact and how easy they are to implement.

Learn from your results

Not every test will succeed, and that’s okay! Use the insights from each test to refine your strategies and keep testing new ideas.

Don’t stop testing

One mistake a lot of marketers make is assuming tests are one-off experiments.

In reality, audience interests and behaviors change over time, so what worked once might not work a year later! Keep running regular tests and never let your campaigns be led by assumption.

A/B testing for constant improvement

A/B testing is one of the simplest and most effective ways to get better results out of your email marketing campaigns.

By continually testing and refining your marketing emails, you can achieve better engagement and drive more conversions. Control your tests, stay consistent, and always be ready to learn and adapt.

Happy testing!
